One Year In: Driving Zixi Forward with the Three Ps – Pricing, Partners, and Politics

Marc Aldrich, CEO, Zixi

Nearly a year into my role as CEO of Zixi, I’ve spent much of this time listening—listening to customers, partners, and the broader industry. From that, three priorities have guided our path forward: Pricing, Partners, and Politics. Each has been central to repositioning Zixi as the leading enabler of live video delivery over IP.

Pricing: Simplifying Engagement, Driving Outcomes

When I joined, customers praised our technology but found our business model complex. Pricing and packaging weren’t always clear, making it harder for organizations to engage fully with us.

We’ve since simplified our offerings into straightforward solutions built around real-world use cases, from sports broadcasters regionalizing feeds, to cloud playout providers scaling globally, to enterprises delivering corporate video. As a result, pricing is predictable, transparent, and tied to business outcomes, with clear units such as per event, per channel, or per affiliate/station.

This clarity doesn’t just make invoices simpler—it enables deeper partnerships. Customers now start conversations not with “what does it cost?” but with “what can we achieve together?”

At IBC, we’ll showcase how technology innovation underpins this work: zero-latency frame thinning, expanded encoding, and a mobile contribution app. These features solve practical operational problems, and our new pricing makes it clear exactly how customers can put them to work.

Partners: Building an Ecosystem for Shared Success

Zixi has always had strong relationships, but they weren’t always structured for mutual success. Over the past year, we’ve reinvigorated our partner ecosystem with a focus on interoperability and joint value creation.

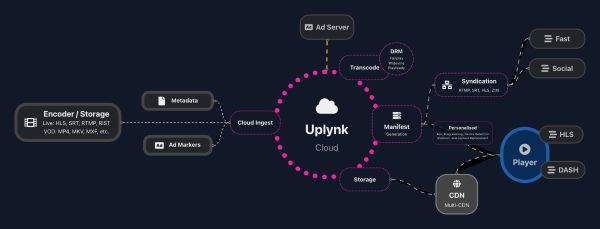

Today, partners are not add-ons but multipliers of impact. Whether through interoperability with Harmonic, co-innovation with Amagi, or service delivery with Uplynk and Encompass, our aim is the same: reduce friction for customers and drive measurable outcomes.

The industry is moving away from siloed, proprietary workflows. Customers demand seamless interoperability—and Zixi enables it. Supporting 13 different protocols, including SRT, RIST, HLS, and our own, Zixi is the connective tissue powering the future of IP video delivery.

The results are clear: faster feature velocity, stronger solutions, and deeper trust with customers who increasingly view us as a long-term business partner.

Politics: Preparing for a Changing Landscape

Technology doesn’t exist in a vacuum—the political and regulatory environment is reshaping the industry.

The upcoming sunset of C-band satellite spectrum is accelerating the shift to IP delivery. At the same time, the U.S. administration’s “Big Beautiful Bill” is investing heavily in broadband infrastructure, opening new markets but also increasing scrutiny on how video is transported, monitored, and monetized.

Zixi is positioned to be the bridge from satellite to IP, helping customers transition smoothly while ensuring compliance and efficiency. We support existing standards such as SCTE 224, SCTE 35, and ESAM (Event Signaling and Management), while also innovating with tools like POIS (Placement Opportunity Information Service) to unlock new monetization opportunities.

Our approach is not about lobbying but about aligning our technology with the regulatory and industry frameworks customers actually operate under, so they are prepared for what’s next.

Looking Ahead: Enabling the IP Era

Across Pricing, Partners, and Politics, the common thread is clear: Zixi enables the industry’s transition to IP.

At IBC we’ll demonstrate this mission in action:

- Zero-latency frame thinning for smoother streaming.

- Modernized Broadcaster interface and ESNI (SCTE 224) management tools.

- A mobile contribution app that turns any device into a Zixi encoder.

- Integration with the Time Addressable Media Store (TAMS) for fast live clipping.

These aren’t just features—they signal a clear direction: Zixi as the platform of choice for delivering live, high-value video anywhere in the world.

Year One Reflections

A year ago, Zixi had world-class technology but untapped potential in how it was positioned. Today, through the Three Ps, we’ve simplified engagement, rebuilt partnerships, and aligned with the realities shaping our industry.

The journey is ongoing, but the path is clear. With transparent pricing, a reinvigorated ecosystem, and forward-looking strategies, Zixi isn’t just adapting to the future of media distribution—we’re helping define it in close alignment with our customers and partners.