NETGEAR – Bridging Broadcast and Pro AV: Using IPMX to Enable Smarter, Simpler IP Workflows

Gus Marcondes, Global Technical Training Manager, NETGEAR

Media over IP has moved from concept to reality in both broadcast and Pro AV. The shift has been driven by clear benefits: the flexibility to design around the needs of each application, the scalability to grow without replacing entire systems, and the cost efficiencies of running over standard network infrastructure rather than dedicated point-to-point cabling.

Despite both industries’ embrace of IP, interoperability remains a challenge, especially when systems rely on proprietary platforms that can’t talk to one another. Open standards directly address this problem, enabling devices from multiple vendors to work together and giving organizations long-term protection for their investments.

Adapting Broadcast Standards for Pro AV

The broadcast sector’s move to IP accelerated in 2017 with the publication of the first SMPTE ST 2110 standards for transporting professional media over managed IP networks. ST 2110 defines how video, audio, and ancillary data — “essences” — are carried in sync over IP. Robust, scalable, and extensible, ST 2110 has been widely adopted in broadcast plants around the world, and it forms the foundation for IPMX (the IP Media Experience).
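Keeping separate essences in sync works because ST 2110 derives each stream's RTP timestamps from a media clock locked to PTP. The sketch below is a simplified illustration that assumes a direct media clock with zero offset from the PTP epoch and ignores leap-second handling. It maps one PTP instant onto the 90 kHz clock used for video and the 48 kHz clock commonly used for audio, which is what lets a receiver recognize that packets from two independently routed streams belong to the same moment. The function name `rtp_timestamp` is hypothetical.

```python
from fractions import Fraction

VIDEO_CLOCK_HZ = 90_000   # RTP clock rate used for ST 2110-20 video
AUDIO_CLOCK_HZ = 48_000   # typical RTP clock rate for ST 2110-30 / AES67 audio
RTP_TS_MODULUS = 2 ** 32  # RTP timestamps are 32-bit and wrap around


def rtp_timestamp(ptp_seconds: int, ptp_nanoseconds: int, clock_hz: int) -> int:
    """Map a PTP time (seconds + nanoseconds since the PTP epoch) onto an RTP timestamp.

    Simplified sketch: assumes a zero media-clock offset and ignores leap seconds.
    """
    # Exact rational arithmetic avoids floating-point rounding on large PTP values.
    t = Fraction(ptp_seconds) + Fraction(ptp_nanoseconds, 1_000_000_000)
    # Count whole media-clock ticks since the epoch, then wrap to 32 bits.
    return int(t * clock_hz) % RTP_TS_MODULUS


if __name__ == "__main__":
    # One PTP instant, expressed on both media clocks.
    secs, nanos = 1_700_000_000, 500_000_000
    print("video RTP timestamp:", rtp_timestamp(secs, nanos, VIDEO_CLOCK_HZ))
    print("audio RTP timestamp:", rtp_timestamp(secs, nanos, AUDIO_CLOCK_HZ))
```

Because both timestamps are computed from the same PTP time, a receiver holding the same PTP reference can convert either one back to wall-clock time and line the essences up without any shared packet stream.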

IPMX is a growing set of technical recommendations based on ST 2110 but tailored for Pro AV. Developed by the Alliance for IP Media Solutions (AIMS) in collaboration with the Video Services Forum (VSF), the Advanced Media Workflow Association (AMWA), SMPTE, and others, it retains the proven ST 2110 transport architecture and the NMOS (Networked Media Open Specifications) control plane while adding features essential to AV deployments. These include EDID handling and HDCP 2.3 support for HDMI workflows, flexible codec options ranging from uncompressed video to high compression, and the ability to operate with or without Precision Time Protocol (PTP).

Technically, IPMX supports multicast and unicast RTP over UDP on links from 1 GbE to 100+ GbE and video resolutions from SD up to 32K. It carries uncompressed video as well as compressed video using JPEG XS and other mezzanine intraframe and interframe codecs, along with USB/KVM, CEC, serial, and GPIO control extensions and AES67-compliant audio alongside consumer audio formats. In certain scenarios, it generates less network overhead than ST 2110, which eases integration into IT-managed networks.
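A quick calculation shows why compression options matter at the low end of that link range. The sketch below assumes 10-bit 4:2:2 sampling (an average of 20 bits per pixel) and counts only the active picture, then computes the raw bitrate of a 1080p60 stream and the minimum compression ratio needed to fit it on a 1 GbE link. The 80% usable-bandwidth figure is an arbitrary headroom assumption rather than an IPMX requirement, and real streams also carry RTP, UDP, and IP header overhead.

```python
def active_video_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Raw bitrate of the active picture only (no blanking, no packet overhead)."""
    return width * height * fps * bits_per_pixel


def min_compression_ratio(bitrate_bps: float, link_bps: float, usable_fraction: float = 0.8) -> float:
    """Smallest compression ratio that fits a stream into the usable share of a link.

    usable_fraction is an assumed headroom margin, not a value from any standard.
    """
    budget_bps = link_bps * usable_fraction
    return bitrate_bps / budget_bps


if __name__ == "__main__":
    # 1080p60 with 10-bit 4:2:2 sampling averages 20 bits per pixel.
    raw_1080p60 = active_video_bitrate_bps(1920, 1080, 60, 20)
    print(f"1080p60 10-bit 4:2:2: {raw_1080p60 / 1e9:.2f} Gb/s")  # 2.49 Gb/s
    ratio = min_compression_ratio(raw_1080p60, 1e9)
    print(f"Minimum compression ratio on 1 GbE: {ratio:.1f}:1")  # 3.1:1
```

Uncompressed 1080p60 needs roughly 2.5 Gb/s before any packet overhead, so a 1 GbE link only works with compression of at least about 3:1 under these assumptions, while a 10 GbE link can carry the same signal uncompressed.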

By making PTP optional and offering lighter-weight configurations where appropriate, IPMX lowers the barrier to entry for AV teams. It allows them to deploy robust, standards-based transport without taking on the full complexity and cost of a broadcast-grade ST 2110 network while keeping the option to integrate with one when the need arises.

Bridging Broadcast and AV With Hybrid Workflows

Broadcast and Pro AV each have their own priorities and operational requirements. Broadcast engineers expect frame-accurate synchronization and uncompressed signal paths; Pro AV integrators often optimize for quick installation, flexibility, and mixed compression workflows. Full convergence of these domains is neither inevitable nor necessary. The more valuable goal is compatibility — the ability to connect systems where there’s a clear operational or business need.

IPMX is well suited to this role. Because it is based on ST 2110, it can integrate directly into a broadcast plant. At the same time, its simplified timing and flexible compression make it practical for AV environments where a pure ST 2110 deployment would be overkill.

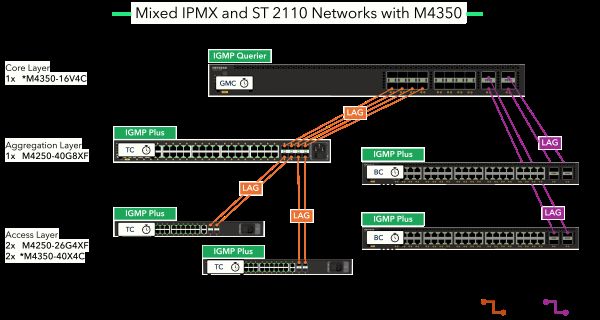

Hybrid workflows that mix IPMX and ST 2110 are becoming more common in multipurpose venues, sports facilities, and corporate studios with broadcast capabilities. In these scenarios, IPMX can serve as a cost-effective bridge, reducing infrastructure duplication. A single network can support AV operations for in-venue displays and presentations while also linking into a broadcast chain for live event coverage.

This selective integration means infrastructure investments deliver more value. The same cabling and switching fabric can carry both worlds, with IPMX handling AV tasks efficiently and ST 2110 taking over when broadcast-grade performance is required.

Designing for Multiple Protocols

In real-world deployments, IPMX will rarely be the only protocol on the network. Many organizations also run proprietary formats such as NDI for video or Dante for audio, alongside open standards like AES67. A practical media network must be able to carry them all.

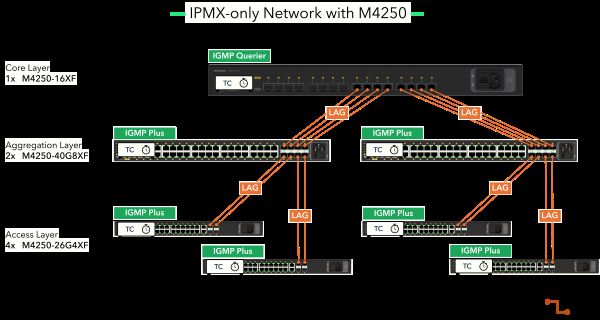

Designing for multi-protocol readiness starts with the fundamentals: VLAN segmentation, multicast filtering, QoS prioritization, and — where needed — the precision of PTP timing. IPMX-only networks can often operate with more relaxed timing requirements, while hybrid IPMX/ST 2110 networks will need synchronous and asynchronous domains to coexist. Configuration profiles can make this easier for engineers and integrators, allowing them to apply a tested set of parameters for each protocol rather than start from scratch.
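Per-protocol configuration profiles can be sketched as plain data. In the minimal example below, the `ProtocolProfile` schema, the VLAN IDs, and the QoS labels are hypothetical placeholders, not vendor or standards recommendations. The PTP flags reflect ST 2110 and AES67 relying on PTP and IPMX treating it as optional, plus the assumption that NDI is deployed without PTP. Combining profiles yields a network-wide plan: IGMP snooping on every VLAN expected to carry multicast, and PTP enabled only when at least one selected protocol requires it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtocolProfile:
    """A tested set of switch parameters for one media protocol (hypothetical schema)."""
    name: str
    vlan_id: int     # VLAN that isolates this protocol's traffic
    multicast: bool  # True if this deployment expects multicast streams
    ptp: str         # "required", "optional", or "none"
    qos_class: str   # hypothetical priority label mapped to switch queues


# Illustrative library of profiles; VLAN IDs and QoS labels are placeholders.
PROFILES = {
    "ST 2110": ProtocolProfile("ST 2110", vlan_id=10, multicast=True, ptp="required", qos_class="high"),
    "IPMX": ProtocolProfile("IPMX", vlan_id=20, multicast=True, ptp="optional", qos_class="high"),
    "AES67": ProtocolProfile("AES67", vlan_id=30, multicast=True, ptp="required", qos_class="high"),
    "NDI": ProtocolProfile("NDI", vlan_id=40, multicast=False, ptp="none", qos_class="medium"),
}


def plan_network(profiles: list[ProtocolProfile]) -> dict:
    """Merge selected profiles into one network-wide plan, rejecting VLAN clashes."""
    vlans: dict[int, str] = {}
    for p in profiles:
        if p.vlan_id in vlans:
            raise ValueError(f"VLAN {p.vlan_id} is assigned to both {vlans[p.vlan_id]} and {p.name}")
        vlans[p.vlan_id] = p.name
    return {
        "vlans": vlans,
        # Enable IGMP snooping on every VLAN expected to carry multicast media.
        "igmp_snooping_vlans": sorted(p.vlan_id for p in profiles if p.multicast),
        # Run PTP network-wide only if some selected protocol requires it.
        "ptp_enabled": any(p.ptp == "required" for p in profiles),
        # Record the QoS class each VLAN should be mapped to.
        "qos": {p.vlan_id: p.qos_class for p in profiles},
    }


if __name__ == "__main__":
    ipmx_only = plan_network([PROFILES["IPMX"]])
    hybrid = plan_network([PROFILES["IPMX"], PROFILES["ST 2110"]])
    print(ipmx_only["ptp_enabled"], hybrid["ptp_enabled"])
```

With only the IPMX profile selected, `ptp_enabled` is `False`, matching the relaxed timing of IPMX-only networks; adding the ST 2110 profile switches it to `True`, because the hybrid network now has to accommodate a synchronous domain.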

Management software plays an important role here. The ability to configure VLANs, multicast behavior, PTP, QoS, and link aggregation through a centralized interface not only speeds deployment but also ensures consistency across devices and sites. This simplifies expansion and allows teams to add new protocols without major redesigns.
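One way centralized management delivers consistency is by comparing each switch's settings against a single template and flagging any drift. The sketch below assumes the settings have already been collected into plain dictionaries; the setting names, switch names, and the `find_drift` function are hypothetical and do not represent a NETGEAR API.

```python
from typing import Any


def find_drift(
    template: dict[str, Any], switches: dict[str, dict[str, Any]]
) -> dict[str, dict[str, tuple[Any, Any]]]:
    """Return, for each switch, the settings whose value differs from the template.

    Each entry maps a setting name to (expected, actual); a setting missing
    from a switch is reported with actual=None. Switches that match are omitted.
    """
    report: dict[str, dict[str, tuple[Any, Any]]] = {}
    for switch_name, running in switches.items():
        diffs = {
            key: (expected, running.get(key))
            for key, expected in template.items()
            if running.get(key) != expected
        }
        if diffs:
            report[switch_name] = diffs
    return report


if __name__ == "__main__":
    # Hypothetical template shared by every site.
    template = {"media_vlan": 20, "igmp_snooping": True, "ptp_enabled": True}
    switches = {
        "core-1": {"media_vlan": 20, "igmp_snooping": True, "ptp_enabled": True},
        "edge-3": {"media_vlan": 20, "igmp_snooping": False, "ptp_enabled": True},
        "edge-7": {"media_vlan": 20, "igmp_snooping": True},
    }
    print(find_drift(template, switches))
    # {'edge-3': {'igmp_snooping': (True, False)}, 'edge-7': {'ptp_enabled': (True, None)}}
```

Keeping the template as the single source of truth means a new site or protocol is added by extending the template once, rather than by hand-editing each switch.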

Technology alone isn’t enough. As AV and IT domains continue to overlap, cross-trained teams are essential. AV technicians now need an understanding of network architecture, while IT professionals benefit from familiarity with media transport standards. This convergence of skill sets improves troubleshooting, accelerates adaptation to new technology, and fosters collaboration across departments, all of which directly improve the reliability and efficiency of media-over-IP systems.

Ensuring Flexibility and Longevity

IPMX extends proven broadcast standards into Pro AV with features that make those standards easier to deploy, more adaptable, and more cost-effective. It can serve as a bridge to ST 2110 in hybrid environments, or as a stand-alone AV-over-IP approach in installations that don’t need a full broadcast infrastructure.

Ideally, a well-designed network can carry any IP-based media protocol, whether open or proprietary, without favoring one over another. This approach maximizes integration options, streamlines upgrades, and protects against disruptive shifts in technology or vendor strategy.

Media organizations and integrators don’t have to figure out AV over IP on their own. Vendors like NETGEAR offer switching platforms and profile-based management that support multiple protocols with equal ease, plus free pre-sales consulting and training to help teams design, deploy, and maintain these networks. With access to the right tools and expertise, engineers across broadcast and Pro AV can build networks to handle any IP-based media protocol, thereby positioning their organizations to adapt smoothly as their technical and business requirements evolve.