Remote production has transformed the way video content is created and delivered in the era of digital streaming. As technology and connectivity have advanced, remote production has become increasingly important and widely adopted. Because it lets teams in different locations collaborate on high-quality video content, remote production offers flexibility, cost efficiency, and scalability. From live events and sports broadcasts to film and television productions, it helps teams work more efficiently, reduce expenses, and deliver content reliably to audiences worldwide.

5G technology gives broadcasters new opportunities to deliver high-quality video content. With greater bandwidth, lower latency, and improved quality of service, 5G lets broadcasters significantly expand their remote production capabilities. However, deploying and maintaining these services remains challenging: it requires extensive testing, troubleshooting, and debugging to identify playback issues and to qualify devices and streams.

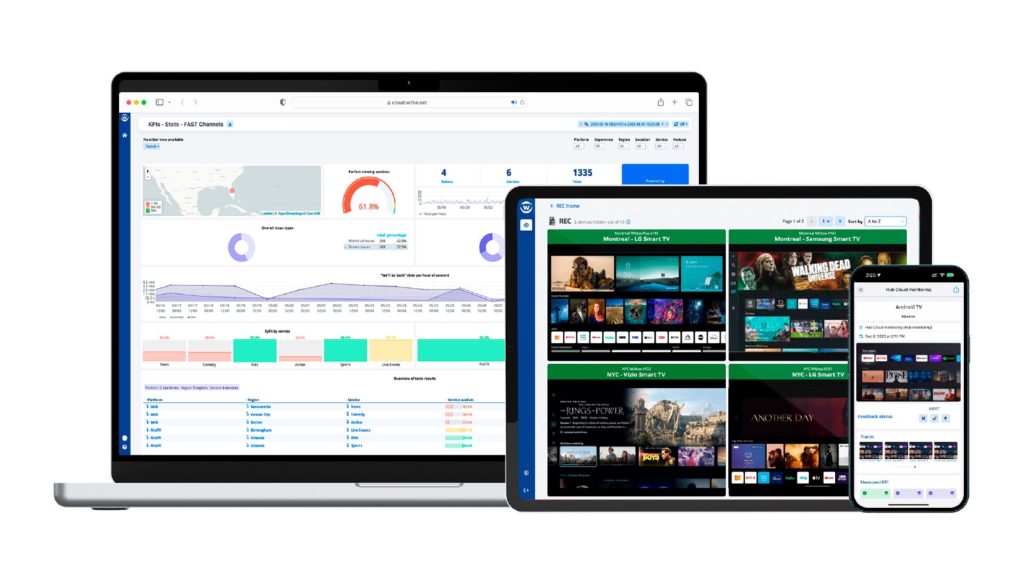

VisualOn, a leading provider of video streaming solutions with a global presence in research and development, operations, and customer support, understands the complexities involved in delivering high-quality video services. Assembling the necessary hardware, streams, and personnel in one location can be expensive and time-consuming. To address this, VisualOn has introduced the Remote Lab project, which is designed to give remote teams easy access to physical devices. The project combines software and hardware solutions to provide optimized audio, video, and multimedia applications.

Remote Lab addresses the challenges of remote production by providing a streamlined way to manage distributed teams, devices, and content. Its ultra-low latency helps streaming service providers offer a consistent quality of service across all viewing devices. This matters because demand for high-quality video content keeps growing across a range of platforms, including smartphones, tablets, and smart TVs.

With Remote Lab, streaming service providers can also test and verify their content before it is released to viewers. This helps prevent playback issues and ensures that the content meets the quality standards viewers expect. In addition, Remote Lab offers an efficient way to train remote teams so they can work together seamlessly.

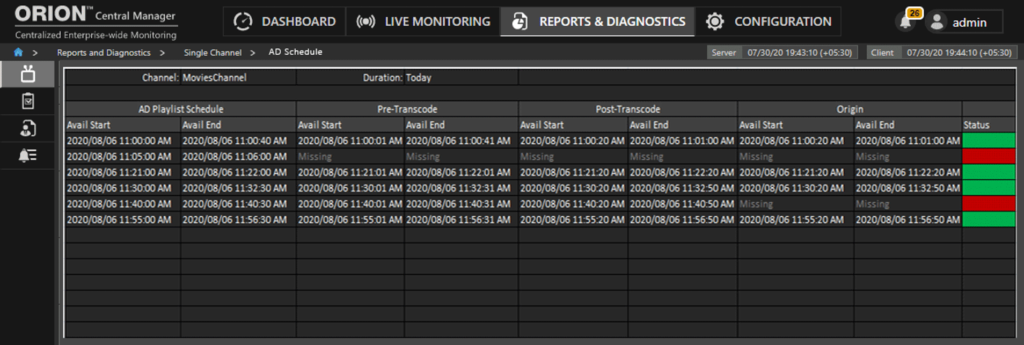

VisualOn Remote Lab supports real-time remote testing and debugging, in-house research and development, and video quality assurance. It provides access to the video feed and device controls from a single web page, low-latency configurations, immediate Android ADB command execution alongside the video feed, and full remote control functionality. It also supports mouse and keyboard control, recording and playback of tests, and full-screen viewing. Additional benefits include:

- Real-time Remote Test and Debug. Bring together distinct teams, devices, and streams to find and resolve playback issues at high frame rates.

- In-house Research and Development Center. Leverage remote resources to develop and test new services cost-effectively.

- Remote Control Room. Set up separate lab instances in different locations and monitor them from a central control room.

- Robotic Process Automation. Run quick automated tests without needing to write testing scripts.

- Support for a Broad Spectrum of Video Platforms. tvOS and Android devices, set-top boxes, smart TVs, web browsers, and other streaming devices.

- Real-time Scrubbing. Use the mouse to scrub through content in real time.

Remote Lab streamlines the deployment and maintenance of high-quality video services for remote production. By giving remote teams easy access to physical devices, it makes managing diverse teams, devices, and content far simpler, and it helps streaming service providers offer a consistent quality of service across all viewing devices.

Check out our latest interview with IABM TV. The session discusses VisualOn Remote Lab and highlights its benefits for OTT video service providers during this period of increased video consumption.