CaptionHub – From on-demand to live: how AI is transforming global video localization

The future of video accessibility and localization

As global content consumption rises, accessibility and localization are no longer just technical considerations. They are essential for audience engagement, brand expansion, and compliance with emerging regulations. Whether it is a corporate announcement, a product launch, or a global live stream, audiences expect seamless, high-quality captions and voiceovers in their preferred language.

Traditionally, localization efforts have focused on video-on-demand. Subtitles and translated voiceovers have long been critical tools for making content accessible to international audiences. But a major shift is happening. Live accessibility, once considered too complex or expensive to implement at scale, is now becoming a priority. Companies that have successfully integrated localization into their on-demand content strategies are beginning to realize the same approach must extend to live events.

The cost of poor accessibility and localization

Failing to prioritize accessibility carries financial, reputational, and legal risks. The European Accessibility Act, set to take effect in 2025, will require companies to make digital products and services, including live and streaming content, fully accessible. In North America, accessibility laws continue to evolve, placing increasing pressure on organizations to comply.

The consequences of non-compliance can be significant. FedEx, for example, was sued by the U.S. Equal Employment Opportunity Commission (EEOC) for violating the Americans with Disabilities Act (ADA) after failing to provide ASL interpreters and closed captioning for employee training videos. The case resulted in a $3.3 million settlement and a mandate to implement ASL interpretation and closed captions across its training materials.

A Verizon Media study found that 80% of consumers are more likely to watch a video to completion when captions are available, and research published in the Journal of the Academy of Marketing Science found that captions improve brand recall, verbal memory, and behavioral intent. For broadcasters, accurate captions and translations mean stronger audience retention, greater monetization potential, and a more inclusive viewer experience.

Despite this, live content has often been left behind. Historically, delivering real-time captions and translations was expensive, technically challenging, and difficult to scale. That is now changing.

Live localization: The next evolution in accessibility

While VOD localization has long been a standard practice, live accessibility is emerging as a critical next step for global brands. Several factors are driving this shift.

Firstly, broadcast and content production, traditionally hardware-heavy with racks and stacks of encoders, MAMs, and an array of other technologies, is now genuinely adopting the cloud across the industry. This brings the opportunity to reimagine entire workflows.

Secondly, audiences now expect the same level of accessibility in live content as they do in pre-recorded media – and in their own language. As enterprises invest more in live video for internal communication, marketing, and industry events, the need for real-time multilingual captioning and translation has never been greater.

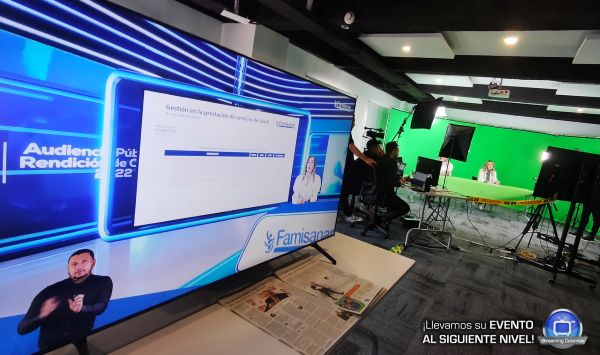

Technology is enabling a new level of efficiency and scalability. CaptionHub Live is at the forefront of this transformation, providing synchronized, AI-powered captions for live broadcasts in 55 source languages and 138 translated target languages. It integrates directly into video players or streams without modifying the underlying broadcast stream, which keeps points of failure to a minimum and provides high resilience and flexibility across most deployment environments.
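To make the idea of captions travelling alongside a stream, rather than inside it, more concrete, here is a minimal sketch of how timed caption segments could be serialized as WebVTT, a standard text format that many web video players can load as a separate track. This is an illustration only and not CaptionHub's implementation: the `Cue` class and the `format_timestamp` and `to_webvtt` functions are hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One caption segment (hypothetical structure for illustration)."""
    start: float  # seconds from the start of the stream
    end: float    # seconds from the start of the stream
    text: str


def format_timestamp(seconds: float) -> str:
    """Render a time in seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[Cue]) -> str:
    """Serialize cues as a WebVTT document, one cue block per segment."""
    lines = ["WEBVTT", ""]
    for cue in cues:
        lines.append(f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}")
        lines.append(cue.text)
        lines.append("")  # a blank line terminates each cue block
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_webvtt([Cue(0.0, 2.5, "Welcome to the keynote.")]))
```

Because the captions live in their own text track, a player could load or swap a language without the video stream itself being re-encoded, which is the general property the paragraph above describes.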

This shift is already playing out in high-profile industry events. AWS re:Invent 2024, one of the world’s most influential cloud computing conferences, selected CaptionHub Live to deliver real-time captions in 15 languages across 51 countries. Partnering with live stream service provider Corrivium, CaptionHub integrated seamlessly with Dolby and THEO Technologies to provide highly accurate, scalable accessibility solutions. This is one of several high-profile events that CaptionHub has underpinned since its launch in Q4 2023.

AWS is one of many organizations recognizing the value of live localization. As brands continue to expand their global reach, ensuring accessibility for live audiences is no longer an afterthought but a core requirement for competitive advantage and audience growth.

AI and the future of multimedia localization

AI is fundamentally reshaping how organizations approach localization. The demand for automated captioning, translation, and AI-powered voiceover is increasing as companies look for scalable ways to engage multilingual audiences.

The most effective solutions combine automation with human-level quality control. AI can generate highly accurate translations and captions in real time, but human oversight remains essential for preserving cultural nuance, tone, and brand consistency.

Voiceover automation is another area seeing rapid innovation. Traditionally, multilingual voiceovers required time-intensive manual recording, limiting scalability. But AI-powered solutions are transforming this process. At NAB 2025, CaptionHub will launch its brand new voiceover editor, designed to streamline voiceover production with precise pronunciation controls, advanced timing adjustments, and automated multilingual speech synthesis. This will allow enterprises to localize content with greater accuracy and efficiency, ensuring brand messaging is consistent across languages.

As enterprises move toward a more global-first approach to content creation, they need solutions that can scale across both live and on-demand media. The shift from manual processes to AI-powered automation is not just about speed. It is about enabling deeper audience engagement, maintaining brand integrity across languages, and ensuring accessibility at every stage of content production.

Why multilingual accessibility is more important than ever

Consumer behavior is shifting rapidly toward multilingual content consumption. A 2024 report by Ampere Analysis found that more than half of internet users in English-speaking markets regularly watch non-English content. Additionally, businesses that invest in localization see increased engagement and revenue growth as audiences prefer content in their native language.

For organizations relying on video for communication, marketing, and education, multilingual accessibility is no longer optional. The ability to deliver high-quality captions and voiceovers efficiently, at scale, and in real time is becoming a defining factor in how brands engage their global audiences.

CaptionHub is setting the standard in this space. With AI-powered solutions that integrate seamlessly into both live and on-demand workflows, the platform is transforming how brands reach international audiences. The industry-wide adoption of AI-driven localization reflects a broader shift. Companies that prioritize accessibility today will not only meet regulatory requirements but also build stronger, more engaged communities around the world.

The question is no longer whether businesses should invest in localization. It is how quickly they can scale it to meet the expectations of an increasingly global and digital-first world.