TMT Insights – CapEx vs. OpEx in Media: Finding the New Balance

Andy Shenkler, CEO, TMT Insights

The ongoing debate between CapEx and OpEx isn’t new within the Media and Entertainment (M&E) industry. The recent surge in streaming platforms and cloud-based workflows pushed many organizations toward flexible, pay-as-you-go OpEx models. Yet, as the industry evolves, companies are increasingly focused on EBITDA, investor scrutiny, and market pressures. In today’s environment of high interest rates and limited growth, monthly OpEx charges weigh heavily on EBITDA, driving renewed interest in CapEx strategies that offer the benefits of capitalization and depreciation.

Where the Shift Started

The urgency of content production, localization, and participation during the “streaming wars” drove rapid decisions. As media supply chains shifted from on-premises to cloud-based models, companies had to rethink organizational structure, pricing, sales strategies, skill sets, and team dynamics to adapt to cloud and AI technologies.

As cloud adoption accelerated, SaaS and monthly OpEx became the norm. Low interest rates and affordable debt made on-demand flexibility appealing amid unpredictable growth.

Eventually, growth leveled off, and the industry’s focus shifted back to financial control and strategic planning. Balancing innovation with financial stability became essential.

Finding a Balance

A stronger focus on cost control and profitability has led to tighter financial scrutiny and a renewed effort to manage expenditures: essentially a “re-tightening” after years of looser practices.

Organizations of all sizes are now working to improve financial forecasting. While OpEx models offer lower upfront costs and greater flexibility, they also make EBITDA targets harder to meet, because operating expenses reduce EBITDA directly, whereas the depreciation of capitalized assets is excluded from it. Businesses must also weigh these financial models against the reality that tools and software often remain in use well beyond the typical three-year depreciation period.

Software is central to this issue. SaaS models shift all costs to recurring OpEx, removing the ability to capitalize software as an asset. Many media companies want to return to ownership when possible. Capitalized software can be amortized over its useful life (the software equivalent of depreciation), while owning the source code or a perpetual license avoids the “hostage model,” in which continued access depends on continued payments to a single vendor. Ownership enables internal development or the use of third parties, offering both predictability and flexibility.
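
To make the EBITDA argument concrete, here is a minimal Python sketch under loudly simplified assumptions: a hypothetical 300,000-per-year subscription versus a hypothetical 900,000 perpetual license that qualifies for capitalization and is amortized straight-line over three years, with no maintenance fees, taxes, or financing costs. The function names and figures are illustrative, not drawn from any real contract, and whether a given license qualifies for capitalization depends on the applicable accounting standards. The sketch shows that the subscription reduces EBITDA every year, while the capitalized license appears only as amortization below the EBITDA line, and that a tool still in service after year three carries no further charge at all.

```python
from dataclasses import dataclass


@dataclass
class YearImpact:
    """P&L effect of a software cost in one year (simplified, hypothetical model)."""

    year: int
    ebitda_charge: float  # expense recognized above the EBITDA line
    d_and_a: float  # depreciation/amortization, excluded from EBITDA

    @property
    def total_pl_charge(self) -> float:
        return self.ebitda_charge + self.d_and_a


def subscription_impact(annual_fee: float, years: int) -> list[YearImpact]:
    """SaaS: each year's fee is an operating expense that reduces EBITDA."""
    return [YearImpact(y, annual_fee, 0.0) for y in range(1, years + 1)]


def capitalized_impact(cost: float, useful_life: int, years: int) -> list[YearImpact]:
    """Perpetual license, capitalized and amortized straight-line over useful_life.

    Amortization sits below EBITDA. Once fully amortized, no further charge is
    recognized (this sketch assumes no ongoing maintenance fees).
    """
    annual = cost / useful_life
    return [
        YearImpact(y, 0.0, annual if y <= useful_life else 0.0)
        for y in range(1, years + 1)
    ]


if __name__ == "__main__":
    # Hypothetical figures chosen for illustration only.
    horizon = 5
    saas = subscription_impact(annual_fee=300_000, years=horizon)
    owned = capitalized_impact(cost=900_000, useful_life=3, years=horizon)
    for s, o in zip(saas, owned):
        print(
            f"Year {s.year}: SaaS EBITDA charge {s.ebitda_charge:,.0f} | "
            f"capitalized EBITDA charge {o.ebitda_charge:,.0f}, "
            f"amortization {o.d_and_a:,.0f}"
        )
```

In the first three years the total P&L charge is the same under both models; the difference is where it lands relative to EBITDA. In years four and five, the owned license costs nothing further while the subscription keeps charging.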

With interest rates potentially declining, particularly in the U.S., companies are increasingly revisiting CapEx strategies. There’s renewed interest in financing methods that support longer-term depreciation schedules. Many are returning to traditional CapEx models, which offer predictable costs and avoid ongoing fees, all while maintaining a cloud presence.

OpEx vs. CapEx in Infrastructure

“Going cloud” clearly remains key to the M&E industry’s long-term evolution and success. Both small and large media companies, even those that are fully on-premises today, understand that they will need to work with cloud-based technology to unlock the benefits of scale, agility, increased collaboration, and advances in machine learning.

Even if a company has clearly built out its cloud roadmap, migrating from current or legacy workflows to the desired future state takes time. Optimizing cloud environments for efficiency and cost while that migration is under way is critical.

It’s also crucial to remember that the costs of working in the cloud do not disappear when workloads move on-premises. They convert from OpEx (e.g., monthly storage and compute fees) to CapEx for hardware, plus additional OpEx for data center operations such as maintenance, power, and cooling.

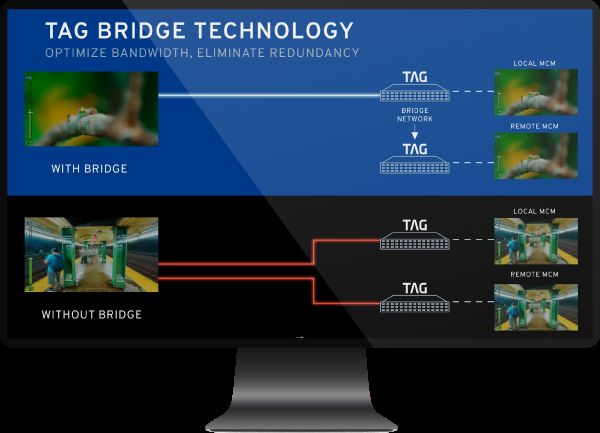

Infrastructure tells a more nuanced story. For compute-intensive, bursty workloads such as streaming spikes, AI, rendering, or temporary live events, OpEx flexibility is invaluable. Storage, however, can be different. Elastic OpEx storage often becomes a proverbial “junk drawer,” where poorly indexed assets labeled “Do Not Delete” create a hoarding problem that only accumulates costs indefinitely. Fixed CapEx storage (or reserved capacity that functionally mimics it) forces discipline and predictability. For many firms, why pay perpetual monthly OpEx for fixed, predictable storage when it could be purchased, capitalized, and depreciated as an asset?
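
The storage question is ultimately a break-even calculation. The minimal Python sketch below assumes storage demand is fixed and predictable (the case described above), a hypothetical up-front purchase cost per TB, a hypothetical monthly on-premises operating cost per TB for power, cooling, and maintenance, and a hypothetical flat monthly cloud price per TB. All prices and function names are illustrative assumptions, not vendor quotes, and the model ignores cost of capital, redundancy, staffing, egress, and hardware disposal.

```python
import math
from typing import Optional


def breakeven_months(
    capex_per_tb: float,
    onprem_opex_per_tb_month: float,
    cloud_per_tb_month: float,
) -> Optional[int]:
    """First whole month at which owning costs no more than renting, cumulatively.

    Owning = up-front CapEx plus monthly operating costs (power, cooling,
    maintenance). Renting = a flat monthly cloud fee. Returns None if renting
    is never more expensive per month, so the purchase never pays back.
    """
    monthly_saving = cloud_per_tb_month - onprem_opex_per_tb_month
    if monthly_saving <= 0:
        return None
    return math.ceil(capex_per_tb / monthly_saving)


def cumulative_costs(
    capex_per_tb: float,
    onprem_opex_per_tb_month: float,
    cloud_per_tb_month: float,
    months: int,
) -> tuple[float, float]:
    """Cumulative (own, rent) cost per TB after a given number of months."""
    own = capex_per_tb + onprem_opex_per_tb_month * months
    rent = cloud_per_tb_month * months
    return own, rent


if __name__ == "__main__":
    # Hypothetical per-TB prices for illustration, not vendor quotes.
    capex, opex, cloud = 400.0, 5.0, 20.0
    months = breakeven_months(capex, opex, cloud)
    print(f"Owning breaks even after {months} months")
    useful_life_months = 60  # assumed five-year hardware life
    own, rent = cumulative_costs(capex, opex, cloud, useful_life_months)
    print(f"Over {useful_life_months} months: own {own:,.0f} vs rent {rent:,.0f} per TB")
```

With these illustrative prices, owning pays back after 27 months, well inside the assumed five-year hardware life. If the cloud price fell to or below the on-premises operating cost, the function returns None, meaning the purchase never pays back.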

A New Frontier: Capitalizing Cloud Infrastructure

This debate also suggests that cloud providers could explore fractional or ownership-based models for infrastructure. If companies could purchase and depreciate storage or compute in the cloud, possibly with a buy-back or resale market when capacity becomes unnecessary or fully depreciated, it would create an entirely new secondary market for cloud infrastructure that could benefit every party to such a transaction. Such models could combine the benefits of CapEx (predictability, depreciation, EBITDA preservation) with the scalability of the cloud.
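
To see how a buy-back market would interact with depreciation, the minimal Python sketch below applies standard straight-line depreciation to a hypothetical block of cloud capacity and compares resale proceeds with its carrying amount. The purchase price, five-year useful life, resale prices, and function names are assumptions for illustration. The arrangement itself is hypothetical, as the paragraph describes, and whether it would be accounted for as an owned asset rather than a lease or service contract would depend on the applicable accounting standards.

```python
def book_value(
    cost: float, useful_life_years: int, years_held: int, salvage: float = 0.0
) -> float:
    """Carrying amount after straight-line depreciation for years_held years."""
    years = min(years_held, useful_life_years)  # no depreciation past useful life
    annual_depreciation = (cost - salvage) / useful_life_years
    return cost - annual_depreciation * years


def gain_on_resale(
    cost: float,
    useful_life_years: int,
    years_held: int,
    resale_price: float,
    salvage: float = 0.0,
) -> float:
    """Resale proceeds minus carrying amount: positive is a gain, negative a loss."""
    return resale_price - book_value(cost, useful_life_years, years_held, salvage)


if __name__ == "__main__":
    # Hypothetical block of cloud capacity bought for 1,000,000 and
    # depreciated over an assumed five-year life.
    print(book_value(1_000_000, 5, 3))  # carrying amount after 3 years
    print(gain_on_resale(1_000_000, 5, 3, 450_000))  # sold back early
    print(gain_on_resale(1_000_000, 5, 5, 100_000))  # sold once fully depreciated
```

Selling before the asset is fully depreciated produces a gain or loss relative to its remaining carrying amount; selling after full depreciation turns the entire proceeds into a gain, which is one way such a secondary market could return value to the original buyer.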

Ownership, Optionality, and Control

Recognizing these struggles was a key principle in how TMT designed our client offerings. We offer very flexible commercial structures for both our award-winning Polaris operational management platform and the recently announced FOCUS companion solution, which unifies decisions across finance, sales, content programming, and operations to drive demand signals into the supply chain. In both cases, clients can choose a traditional perpetual license with a one-time acquisition cost or spread that cost over time through a subscription model. What is unique, however, is access to all source code and a deployment model that allows either option to be fully capitalized in accordance with GAAP.

Which Model is Right for Me?

There’s no universally “right” or “wrong” choice between CapEx and OpEx models—it depends on a company’s financial strategy, operational needs, and goals.

CapEx suits stable, capital-heavy operations, while agile, scalable workflows often benefit from OpEx. Many in M&E now favor a hybrid approach, combining owned infrastructure with cloud services to balance control, cost-efficiency, and innovation. This allows for CapEx investment in core infrastructure, with OpEx used for scaling, innovation, and remote access.

CapEx is ideal for organizations with consistent production needs, long-term infrastructure use, full-control requirements, or available capital, such as major studios and broadcasters.

OpEx works well for companies needing fast or temporary scale, such as remote productions or event-based campaigns, and is favored by digital-first or streaming startups that prioritize agility.

Ultimately, hybrid models are emerging as the most practical path forward, supporting EBITDA preservation and capitalized assets while leveraging OpEx for flexibility and growth. The choice is now a strategic one that shapes agility, competitiveness, and long-term control.