CaptionHub – The shift from hardware to cloud-native audience growth

Kirsty McGowan – CaptionHub Director of Marketing

Live captioning has long been a core component of any broadcast workflow.

In recent years, many control rooms have virtualized, while others remain hardware-based for a myriad of reasons, from legacy contracts and change-management delays to production necessities such as outside broadcast trucks. Remote production is, however, fast becoming the standard. IP and cloud are everywhere. Yet captioning has often remained stuck with hardware encoders, SDI patching, on-prem boxes, and on-site specialists. We all know the cloud argument – it’s not new – you can genuinely do things faster, at lower cost and at greater scale. More with less, and today, not tomorrow.

For a long time, hardware-bound captioning was just accepted as the cost of doing things properly. But audience behavior and business models have moved on. Captioning hasn’t kept up, until now.

Captions as Revenue Infrastructure, Not Just Compliance

Captions used to live in the “regulatory necessity” bucket. Now they sit much closer to “audience growth” and “revenue generation”.

A few simple realities:

- A huge proportion of video is watched with the sound off – especially on mobile and social. If your content isn’t captioned, you’re simply invisible in those environments.

- Captions increase completion rates and watch time on streaming platforms. Viewers stay longer when they can follow along easily.

- Multilingual captions open doors to markets that were previously uneconomical to serve with separate language feeds or fully localized productions.

Add accessibility legislation, platform-level expectations, and the fact that younger audiences treat captions as standard, and it becomes pretty clear: captions now sit in the critical path of content performance and monetization.

If captions drive reach and reach drives revenue, then captioning workflows can’t be a fragile bolt-on anymore. They need to be first-class citizens in the stack.

The “Missing Encoder” Wake-Up Call

This shift came into focus for us during one of the world’s most-watched sports events this year.

Picture a major tournament, broadcast globally, strict timings, and very little margin for error. The production truck arrives onsite. The team begins running through checks and realizes the captioning encoder hasn’t been loaded onto the truck.

Traditionally, that’s a nightmare scenario. The options would have looked something like this:

- Ship in hardware at speed (and at cost)

- Fly in or divert specialist engineers

- Rewire parts of the chain to accommodate a temporary setup

- Accept a high-risk, last-minute, half-tested solution

Instead, the team picked up the phone. They had recently been introduced to Timbra, the real-time localization suite from CaptionHub.

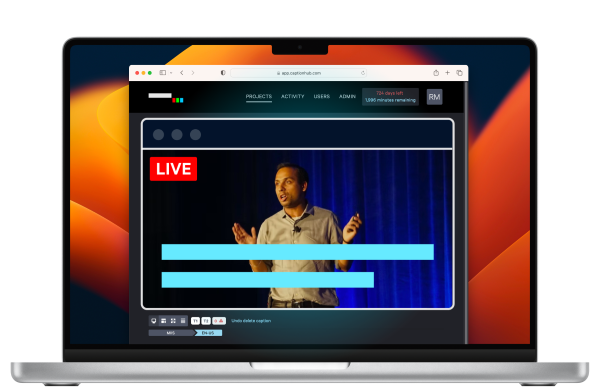

Because Timbra is fully cloud-based and AI-powered, they didn’t need to source physical kit or re-engineer the signal path. Within hours, non-specialist staff were logged into a browser, running tests, configuring caption styles to fit their brand, uploading brand terms via custom dictionaries to increase transcription accuracy, and validating latency and sync. Translation was a welcome extra, enabled with a single checkbox for 250 more languages.

By the time coverage began, live captions were running smoothly in their stream. Accuracy was strong, timing was tight, and the team had the confidence to let it run across the full event. The feedback afterwards was straightforward: if this is what’s possible in a rush, what could be done with Timbra when it’s part of the plan from the outset?

They’re now assessing how to replace their old approach for future events.

This wasn’t just a “save the day” story. It was a clear sign that the underlying assumption – that serious captioning requires heavy on-site infrastructure – no longer holds.

What Cloud-Native Captioning Actually Changes

AI on its own doesn’t fix captioning. You need the right architecture around it. The real shift comes from moving captioning to a cloud-native, software-driven model that can plug into existing broadcast and streaming workflows.

A few tangible differences:

1. No more encoder dependency.

Captioning no longer hinges on whether a box made it into the truck. You don’t need a rack full of hardware to deliver compliant, high-quality captions. You just need the right integrations and an internet connection.

2. One platform, all use cases.

Historically, different environments often meant different solutions: broadcast encoders for linear, something else for streaming, something entirely separate for in-room displays or corporate events.

A cloud-native platform like Timbra can handle:

- Live broadcast captioning

- In-room captions at events and summits

- Captions via companion devices

- Multilingual variants for international feeds

- Enterprise AV use cases like product launches, internal town halls, public-sector briefings and more

- Captioned VOD content published after the live broadcast

The captioning logic, AI models, dictionaries and rules live in one place, even if outputs go everywhere.

3. Multilingual as standard, not a special project.

Real-time translation has reached a point where multilingual captions are viable at scale. With the right language models and controls in place, broadcasters can spin up additional languages without commissioning a completely separate production. This supports:

- Regionalized streams without separate master control

- Parallel markets where English-only used to be the limit

- Enterprise and government comms where multiple languages are mandatory

In revenue terms, each additional language becomes a lever to tap new audiences and advertisers, rather than a major new cost line.

4. Operational control shifts to production teams.

Cloud-native captioning platforms are designed to be operated by production, digital, or events teams – not just engineering specialists. Interfaces are more intuitive, configuration is simpler, and changes can be made on the fly. That doesn’t remove the need for engineers, but it does mean captioning isn’t blocked by a handful of people.

5. Better fit with sustainability targets.

Removing hardware from the chain isn’t just a convenience. Less shipping, less power-hungry kit, and fewer site visits all support sustainability goals that many broadcasters and media organizations have already committed to.

Captioning may be a small part of the overall footprint, but cloud workflows help take friction out of decarbonization.

From Broadcast to Enterprise AV – Same Problem, Same Solution

What’s happening in broadcast is mirrored across other industries, many of which, for political or economic reasons, have been able to move more quickly. Global town halls are streamed to thousands of employees in different regions, and enterprise broadcast suites, once laden with hundreds of light-flashing boxes, are now sitting unused or being shut down in favor of remotely controlled production. We’re seeing this everywhere, from major public institutions and the world’s largest tech companies to small production teams who have been serving major clients for decades.

All of these share the same constraints: limited specialist resource, dispersed audiences, and a need to move fast without compromising on quality or compliance.

The workflows that Timbra is running in major broadcast environments map directly onto these Enterprise AV scenarios. If a solution can comfortably support a top-tier sports event, it can certainly support a global internal broadcast or product launch.

This is where the unit economics become interesting. One cloud-native localization suite can serve multiple parts of a business and multiple markets, instead of each team buying and maintaining its own rigid solution. Logistics costs down, support costs down, per-region costs down, per-stream costs down. And ultimately, the cost of reaching each new audience member comes down significantly.

What Broadcasters Should Be Asking Now

The technology is here. The question is no longer “is AI good enough?” – live deployments are already proving that it is, especially when combined with model selection, custom dictionaries, and human oversight where needed.

The more relevant questions are:

- Why are we still relying on hardware-heavy captioning chains when software-based cloud workflows are more flexible?

- How many revenue opportunities are we leaving on the table by not offering captions – or multilingual variants – everywhere we distribute content?

- Are we treating captioning purely as a cost, or as part of our audience and revenue strategy?

For many organizations, the opportunity is less about adding something new and more about consolidating what already exists: bringing accessibility, localization, and event workflows into a single environment; reducing duplicated spend; and making it easier for teams across the business to turn captions on when they need them.

That’s ultimately where Timbra by CaptionHub sits: a way to take live captioning and localization out of the “clunky but necessary” category and turn it into a flexible, cloud-based service that supports broadcast, streaming and Enterprise AV alike.

Captioning is no longer the awkward, hardware-bound afterthought at the edge of the signal chain. Done right, it becomes a quiet but powerful revenue growth engine – one browser tab alongside everything else.