Viaccess-Orca – The 5 Pillars of Video Streaming Efficiency: How to Launch Faster, Scale Smarter, and Spend Wiser

Alain Nochimowski, Chief Technology Officer at Viaccess-Orca

For today’s streaming services, operational efficiency is a key differentiator. The difference is made not in bitrates or compression, but in how platforms are architected, deployed, and scaled. As cloud infrastructure and AI-enriched processes become increasingly pervasive, the competitive focus is shifting from feature innovation to optimizing total cost of ownership (TCO) and building resilient, scalable systems. According to Gitnux, over 83% of streaming providers plan to expand their AI capabilities within two years. Their aim is not only to enhance viewer analytics but also to reduce operational overhead, for example by cutting content moderation times by up to 60%. The ability to scale up for peak demand, meet SLAs, and industrialize workflows is now central to sustainable growth.

This shift marks the decline of feature overload. OTT providers are moving away from novelty-driven development and toward platforms that are operationally sound and adaptable to rapid change. Operational efficiency is no longer just a technical goal; it is a strategic imperative. This requires service providers to rely on vendors proficient in the most modern software development and deployment processes.

- Automation & Modern Deployment

Automating operational processes plays a critical role in improving the speed, reliability, and consistency of streaming platform maintenance. It reduces costs, enables frequent upgrades, and shortens time-to-market, helping platforms respond quickly to user needs and competitive pressures. Just as importantly, automation helps eliminate human error and reduces bottlenecks caused by specialized configuration knowledge, making systems more resilient and easier to scale.

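Modern deployment strategies such as canary and blue-green rollouts enable incremental releases that minimize risk and preserve service continuity. Canary releases expose a new version to a small share of users first. Blue-green deployments keep the old (blue) and new (green) environments running side by side so that traffic can be switched from one to the other, and switched back if needed. Because rollout and rollback follow a repeatable procedure, these methods reduce human error and support controlled testing in production, which is essential for high-availability platforms.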
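To make the two strategies concrete, here is a minimal sketch of the routing decision behind each. It assumes users are identified by a string ID and that environments are reachable at URLs. The functions `canary_bucket`, `route_canary` and the class `BlueGreenRouter`, along with the URLs, are hypothetical illustrations, not part of any specific product. Hashing the user ID keeps each viewer on the same version across requests. The blue-green router shows why rollback is cheap: it is the same pointer swap as the release itself.

```python
import hashlib


def canary_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, 100) using a hash."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route_canary(user_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent% of users to the canary release.

    The same user always lands in the same bucket, so their experience
    stays consistent while the canary is being evaluated.
    """
    return "canary" if canary_bucket(user_id) < canary_percent else "stable"


class BlueGreenRouter:
    """Keep two full environments live and move all traffic with one switch."""

    def __init__(self, blue_url: str, green_url: str) -> None:
        self.environments = {"blue": blue_url, "green": green_url}
        self.active = "blue"  # blue serves traffic initially

    def target(self) -> str:
        """Return the URL of the environment currently receiving traffic."""
        return self.environments[self.active]

    def switch(self) -> None:
        """Flip traffic to the other environment (also used for rollback)."""
        self.active = "green" if self.active == "blue" else "blue"


# Hypothetical usage: a 10% canary, then a blue-green cutover.
print(route_canary("viewer-42", 10))
router = BlueGreenRouter("http://blue.internal", "http://green.internal")
router.switch()
print(router.target())  # http://green.internal
```

In practice, the canary percentage is raised in steps while error rates and playback metrics are watched, and the blue environment is kept warm until the green one has proven stable.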

At the heart of these practices is the need to manage software with enterprise-grade rigor. Automation powered by modern Software Development Life Cycle (SDLC) frameworks and CI/CD pipelines standardizes deployment, scaling, and maintenance tasks, reducing configuration bottlenecks and freeing engineering teams to focus on innovation. This foundation equips streaming providers to iterate rapidly and deliver new features with confidence.

- Adoption of Cloud-Native Architecture

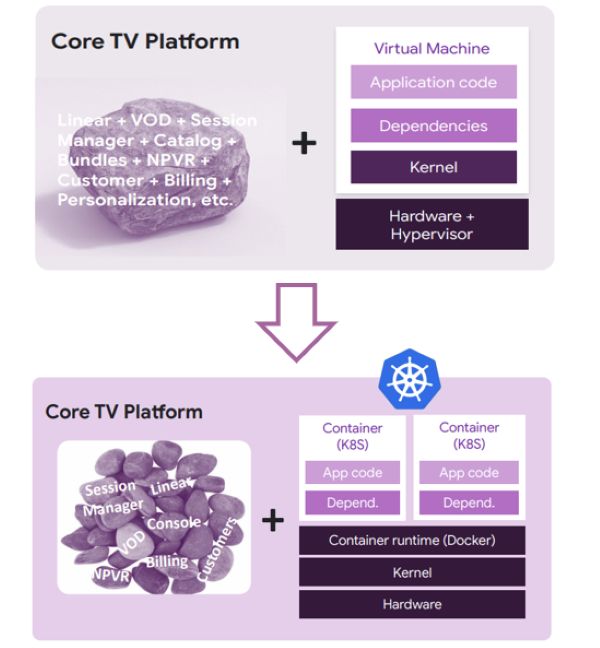

Cloud-native technologies like containers, Kubernetes, and managed services are now standard for building scalable, flexible streaming platforms. Rooted in open-source principles, they support interoperability and evolving cloud architectures. This enables deployment across public clouds, private data centers, or hybrid setups with minimal friction.

This portability eases future migration and hybrid scaling and provides the flexibility needed to handle peak demand, such as live sports events, when infrastructure needs can spike. Cloud-native systems scale dynamically, avoiding overprovisioning and maintaining performance under pressure, while helping providers optimize resource use across environments.
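Dynamic scaling in Kubernetes is commonly handled by the Horizontal Pod Autoscaler (HPA). Its documented core rule is proportional: desired replicas = ceil(current replicas × current metric / target metric). Small deviations inside a tolerance band (0.1 by default) are ignored, and the result is bounded by the configured minimum and maximum. The sketch below reproduces only that rule. The real controller adds stabilization windows and pod-readiness handling that are omitted here. The function name and the traffic figures are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
    tolerance: float = 0.1,
) -> int:
    """Replica count from the proportional rule used by the Kubernetes HPA.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within the tolerance band, then clamped
    to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    # Small deviations are ignored to avoid flapping between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical live-sports spike: 4 pods each serving 90 req/s
# against a target of 60 req/s per pod.
print(desired_replicas(4, 90, 60))  # 6
```

Because the rule also scales down when the metric drops, capacity added for a match is released afterward. That is how dynamic scaling avoids paying for peak capacity around the clock.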

- Architectural Balance: Monoliths vs. Microservices

The architectural debate between monoliths and microservices is often framed as a binary choice, but in practice, the most effective streaming platforms adopt a balanced, context-driven approach. Monolithic systems can offer stability and optimized internal flows, making them suitable for tightly coupled, high-throughput functions that benefit from centralized control. However, they also come with significant drawbacks: large, intertwined codebases are harder to maintain, slower to evolve, and less conducive to innovation, which makes them more costly over time.

Microservices, by contrast, offer greater specialization and agility. They allow teams to develop, test, and deploy features independently, which can significantly reduce time-to-market. This is particularly valuable when different business models coexist. For example, transactional and subscription-based content serve distinct audiences and follow different dynamics. Yet microservices also introduce complexity, which can lead to inefficiencies if not carefully managed. A pragmatic hybrid architecture that supports legacy systems while enabling modular evolution often provides the best of both worlds.

Modular architectures support isolated feature updates, which narrows the scope of testing and accelerates delivery. Modularity also enables granular scalability: instead of scaling the entire platform, providers can scale only the services experiencing increased demand, thereby optimizing infrastructure costs. This architectural adaptability allows platforms to evolve incrementally and respond to changing needs without disruptive overhauls.
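The cost effect of granular scalability can be illustrated with simple arithmetic. The sketch below compares scaling every component together, as a monolith forces, with scaling only the service under load. The service names, replica counts, and hourly costs are hypothetical, and the model deliberately ignores shared overheads such as databases or networking.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    replicas: int
    hourly_cost_per_replica: float  # hypothetical unit cost


def monolith_scale_cost(services: list, factor: int) -> float:
    """Monolith: every component is multiplied by the same factor."""
    return sum(s.replicas * factor * s.hourly_cost_per_replica for s in services)


def granular_scale_cost(services: list, hot: set, factor: int) -> float:
    """Microservices: only services in `hot` are scaled by the factor."""
    return sum(
        (s.replicas * factor if s.name in hot else s.replicas)
        * s.hourly_cost_per_replica
        for s in services
    )


# Hypothetical platform where only playback is hit by a 3x spike.
platform = [
    Service("catalog", 4, 1.0),
    Service("playback", 6, 0.5),
    Service("billing", 2, 0.25),
]
print(monolith_scale_cost(platform, 3))                 # 22.5
print(granular_scale_cost(platform, {"playback"}, 3))   # 13.5
```

In this toy case, the baseline costs 7.5 per hour. Tripling the whole platform costs 22.5 per hour, while tripling only playback costs 13.5 per hour.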

- Managing Technical Debt

Technical debt refers to the long-term cost of quick fixes, outdated code, or architectural shortcuts that make future development more challenging. Like financial debt, it accrues interest, which is the extra time and effort required to make changes due to existing flaws. Refactoring takes time upfront but saves time later.

In streaming environments, technical debt can manifest as security vulnerabilities (e.g., published CVEs) or as non-compliance with evolving regulations such as NIS2, to which certain European operators may be subject. Left unmanaged, it can hinder agility, increase risk, and limit the adoption of new technologies. This challenge is amplified by the growing reliance on third-party (often open-source) software, which creates a complex web of dependencies. Treating technical debt as an ongoing responsibility helps ensure platforms remain adaptable, secure, and ready for future growth.
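One routine way to keep dependency-related debt visible is to compare the inventory of third-party components against known advisories on every build. The sketch below shows that comparison in its simplest form. The package names and advisory IDs are hypothetical placeholders. It also assumes that every version below the "fixed in" version is affected, which is a simplification. Real scanners query vulnerability databases such as OSV or the NVD and handle more complex version ranges.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Version = Tuple[int, ...]


@dataclass(frozen=True)
class Component:
    name: str
    version: Version


# Hypothetical advisories: package name -> (first fixed version, advisory ID).
ADVISORIES: Dict[str, Tuple[Version, str]] = {
    "example-parser": ((2, 4, 1), "ADVISORY-0001"),
    "example-http": ((1, 9, 0), "ADVISORY-0002"),
}


def find_vulnerable(
    inventory: List[Component], advisories: Dict[str, Tuple[Version, str]]
) -> List[Tuple[str, str, Version]]:
    """Return (name, advisory ID, fixed-in version) for outdated components."""
    findings = []
    for comp in inventory:
        if comp.name in advisories:
            fixed_in, advisory_id = advisories[comp.name]
            # Simplification: any version older than the fix is affected.
            if comp.version < fixed_in:
                findings.append((comp.name, advisory_id, fixed_in))
    return findings


inventory = [
    Component("example-parser", (2, 3, 0)),
    Component("example-http", (1, 9, 0)),
    Component("example-ui", (5, 0, 0)),
]
print(find_vulnerable(inventory, ADVISORIES))
# [('example-parser', 'ADVISORY-0001', (2, 4, 1))]
```

Running such a check in the CI/CD pipeline turns dependency upgrades into a continuous, tracked task rather than an occasional emergency.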

- Optimizing the Bill of Materials (BOM)

Efficiency in streaming platforms extends beyond software architecture, encompassing the entire ecosystem of hardware, infrastructure, and operational components. The Bill of Materials (BOM) represents the full cost structure of a platform, including not just the software itself but also servers, networking equipment, cloud services, and, increasingly, third-party software components. Viewing the BOM holistically helps organizations understand true ownership costs and identify opportunities for optimization.

To meet expectations for operational efficiency, infrastructure planning must be tightly integrated with software roadmaps. This means evaluating how each component, physical or virtual, is used over time and ensuring that resources are sized to actual demand, neither sitting idle nor running short at peak. Strategic planning reduces redundancy and prepares systems for demand spikes. When treated as a strategic asset, the BOM becomes a foundation for smarter investment decisions and more adaptable platforms.
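A holistic BOM review can start from a simple inventory that records each line item's cost and how much of its capacity is actually used. The sketch below totals the monthly cost and flags items that appear overprovisioned or close to their limit. The item names, costs, utilization figures, and the 30%/80% thresholds are all hypothetical and would need to be tuned to a platform's own traffic patterns.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class BomItem:
    name: str
    kind: str            # "physical", "virtual", or "software"
    monthly_cost: float  # hypothetical cost in any consistent currency
    utilization: float   # average fraction of capacity used, 0.0 to 1.0


def review_bom(
    items: List[BomItem], low: float = 0.3, high: float = 0.8
) -> Tuple[float, Dict[str, str]]:
    """Return total monthly cost and flags for items outside [low, high]."""
    total = sum(item.monthly_cost for item in items)
    flags = {}
    for item in items:
        if item.utilization < low:
            flags[item.name] = "overprovisioned"  # paying for idle capacity
        elif item.utilization > high:
            flags[item.name] = "near capacity"    # risk during demand spikes
    return total, flags


bom = [
    BomItem("edge-cache-cluster", "physical", 1000.0, 0.9),
    BomItem("transcoding-pool", "virtual", 500.0, 0.2),
    BomItem("drm-license", "software", 250.0, 0.5),
]
total, flags = review_bom(bom)
print(total)  # 1750.0
print(flags)  # {'edge-cache-cluster': 'near capacity', 'transcoding-pool': 'overprovisioned'}
```

Reviewing these flags alongside the software roadmap shows where capacity can be released and where it should be added before a planned peak.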

Looking Ahead: A Pragmatic Path to Efficiency

Efficiency will continue to shape the success of streaming platforms. Providers are embracing gradual, evidence-based improvements over sweeping changes, evaluating technologies based on context rather than trendiness.

AI is increasingly accelerating these transformations by streamlining deployment, enhancing observability, and optimizing resource use across the Software Development Life Cycle (SDLC). As these capabilities mature, providers will iterate faster and adapt more confidently.