Darby Marriott

Product Manager - Production Playout Solutions at Imagine Communications

The Merriam-Webster dictionary defines metadata as “data that provides information about other data”. Some call it “bits about bits”.

In the media industry, it is the information which tells you about the video or audio content. And, as such, metadata has been around a lot longer than the computer. If you still have film in your archive, you would expect to find shot lists on a sheet of paper inside each can. And, at the very least, archivists would have a card index to help find things on the shelves.

These manual processes broke down when we moved into storing content on video servers. When the essence is just ones and zeroes spread across a number of disk drives, you need a database to track files and find them when you need them.

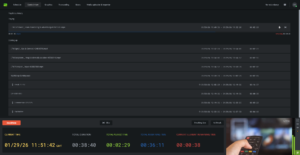

Asset management went hand in hand with broadcast automation, ensuring the programmes and commercials in the schedule were correctly identified for the playlist, and those files were loaded into the playout servers and cued at the right moment.

Today, we demand more from metadata. Schemas have grown ever bigger, with a huge amount of information on each piece of content. Some of this is descriptive, telling you what you have and who was involved; some of it is technical – everything from codec and aspect ratio to camera and lens serial numbers.

In a modern, connected production and delivery center, a large number of people will care about parts of the metadata, but – system administrator aside – no-one needs to know all of it. So the well-designed system will manage the data and the user interfaces to ensure everyone is perfectly informed without being overloaded with information.
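As a minimal sketch of that idea, assuming each asset's metadata is held as a flat key-value record, the snippet below projects the full record down to the fields a given role needs. The role names, field names and the `view_for` helper are all hypothetical, chosen for illustration; a real schema would be far larger, and the mapping would normally live in the asset management system's configuration rather than in code.

```python
from typing import Dict, Optional, Set

# Hypothetical role-to-field mapping; None means "see everything".
ROLE_FIELDS: Dict[str, Optional[Set[str]]] = {
    "system_admin": None,
    "promo_editor": {"title", "synopsis", "talent", "duration"},
    "playout_operator": {"house_id", "duration", "codec", "aspect_ratio"},
}


def view_for(role: str, record: Dict[str, object]) -> Dict[str, object]:
    """Return only the metadata fields relevant to the given role."""
    if role not in ROLE_FIELDS:
        return {}  # unknown roles see nothing rather than everything
    allowed = ROLE_FIELDS[role]
    if allowed is None:
        return dict(record)  # administrators see the full record
    return {key: value for key, value in record.items() if key in allowed}


# Hypothetical metadata record mixing descriptive and technical fields.
record = {
    "house_id": "HYPO-000123",
    "title": "New Series, Ep 1",
    "synopsis": "Pilot episode",
    "talent": ["Presenter A"],
    "duration": "00:58:30:00",
    "codec": "XDCAM HD422",
    "aspect_ratio": "16:9",
    "lens_serial": "L-0001",
}

print(view_for("promo_editor", record))
```

Defaulting unknown roles to an empty view is a deliberate choice in this sketch: a misconfigured user sees too little rather than being overloaded (or seeing data they should not).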

To explain what I mean, think about the marketing department of a broadcaster. A great new series is being delivered, and you want to ensure it attracts the biggest possible audience. One of the ways that you do this is by creating a suite of trailers and promos.

The editor charged with the task of creating these promos does not care about any of the technical information – he or she will reasonably assume that the content is fine if it has made it to the live servers. All the editor has is a work order, saying “take our standard trailer format and make a set for this new programme.”

The editor may well be using Adobe Premiere and After Effects. Adobe has its own, excellent metadata format, XMP. A smart system design will integrate the overall asset management and playout system with Adobe so that, when the editor opens the work order, the content – from the video server and from the Adobe templates server – is already loaded into Premiere and is ready to go.

When the trailer is completed, the editor or graphics artist initiates the export process. The relevant XMP metadata is embedded in the exported file, where it is exposed to the asset management system. The asset management system also generates house-standard content IDs and adds them to schedules and playlists as needed.

A really smart system will integrate even more data, such as when to trigger coordinated live events. So rather than the graphic in the trailer just saying “coming soon”, it will say “launches 1 September at 9:00pm”, dynamically linked to the schedule information, and playback can trigger a coordinated live DVE squeezeback.
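A minimal sketch of the schedule link, assuming the schedule can be queried as a simple mapping from a hypothetical series ID to its first transmission time. The promo text is generated from that entry when the graphic is rendered, so a change to the schedule flows through to the trailer; if the series is not yet scheduled, the text falls back to “coming soon”. The function names, IDs and dates are illustrative only, and the squeezeback trigger is not modelled.

```python
from datetime import datetime
from typing import Dict

# Month names spelled out explicitly so the output does not depend on locale.
MONTHS = (
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
)


def format_launch(start: datetime) -> str:
    """Format a transmission time as e.g. 'launches 1 September at 9:00pm'."""
    hour12 = start.hour % 12 or 12  # 0 -> 12am, 12 -> 12pm
    suffix = "am" if start.hour < 12 else "pm"
    return f"launches {start.day} {MONTHS[start.month - 1]} at {hour12}:{start.minute:02d}{suffix}"


def promo_tagline(schedule: Dict[str, datetime], series_id: str) -> str:
    """Build the trailer text from the schedule, falling back if unscheduled."""
    start = schedule.get(series_id)
    if start is None:
        return "coming soon"
    return format_launch(start)


# Hypothetical schedule extract: series ID -> first transmission.
schedule = {"SERIES-001": datetime(2021, 9, 1, 21, 0)}
print(promo_tagline(schedule, "SERIES-001"))  # launches 1 September at 9:00pm
print(promo_tagline(schedule, "SERIES-002"))  # coming soon
```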

This metadata connectivity is all real and happening now. Promo teams use it, and newsroom editors use exactly the same workflow when a news package needs a craft edit with content from the archive.

Any news broadcaster will tell you that the archive is the most valuable asset they possess. Reporting a story is one thing; putting it into context is vital: what has happened before, what people said in the past, and what the consequences of an action are likely to be.

It is probably in the newsroom, then, that we will see the next big steps in the development of metadata. This will build on the intelligent automation and communication we have today, and augment it with machine learning and artificial intelligence to enhance metadata, with the aim of creating better content by knowing more about what is in the archive.

The pandemic is a good example. Since February 2020, politicians, medical experts, statisticians and citizens have all said a very great deal on the subject of Covid-19. A journalist reporting on a new development today will create a better story if it reflects back on what the parties involved have said in the past.

AI tools are already available which can create very detailed metadata, completely automatically. The soundtrack can be automatically transcribed, so you can search for what someone said and find the point in the clip at which they said it.
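Once a transcript exists, searching it is straightforward. The sketch below assumes the speech-to-text service returns segments with a start offset in seconds and a speaker label; the `Segment` structure is hypothetical, not any particular vendor's output format. Each hit is converted to a non-drop-frame timecode at an assumed 25 fps, so the journalist can jump straight to the right frame.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Segment:
    start: float  # offset from the start of the clip, in seconds
    speaker: str
    text: str


def to_timecode(seconds: float, fps: int = 25) -> str:
    """Convert a seconds offset to non-drop-frame HH:MM:SS:FF timecode."""
    total_frames = int(round(seconds * fps))
    frames = total_frames % fps
    total_seconds = total_frames // fps
    hours, remainder = divmod(total_seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"


def search(segments: List[Segment], phrase: str, fps: int = 25) -> List[Tuple[str, str, str]]:
    """Return (timecode, speaker, text) for every segment containing the phrase."""
    needle = phrase.lower()  # case-insensitive substring match
    return [
        (to_timecode(seg.start, fps), seg.speaker, seg.text)
        for seg in segments
        if needle in seg.text.lower()
    ]


# Hypothetical transcript fragment.
transcript = [
    Segment(61.0, "Speaker 1", "We will review the guidance next week."),
    Segment(3725.4, "Speaker 2", "The vaccine rollout starts in the spring."),
]

for hit in search(transcript, "vaccine"):
    print(hit)
```

A production system would use a proper full-text index rather than a linear scan, but the principle is the same: every word is tied to a timecode.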

AI can now be applied to video analysis, creating shot lists and detailed information on the content of the clip or programme, with each incident tied to a timecode. This has obvious benefits in the newsroom, where journalists can quickly find exactly the clip they need. But it has wider benefits, too: it is a powerful tool in discovery, whether that is in programme syndication or direct to consumers.

AI can, of course, be run on premises. But it is an obvious cloud use case. The major cloud providers, like Amazon Web Services (AWS) and Microsoft Azure, have their own powerful AI tools which can be trained to your specific requirements. Video and audio analysis is also inherently “peaky”, with long periods of inactivity interspersed with high processor demand when there is new material to be indexed, which suits cloud capacity that can be scaled up for each batch and released afterwards.

Wherever you are in the broadcast and media production and delivery chain, the future of metadata is that it will become ever richer. Automated tools and machine learning will contribute greatly, as will the integration of multiple application-specific metadata sources like Adobe XMP.

Critical to benefitting from all this information is an over-arching integration and management layer. The right tools and the right information must be presented to each individual in the organisation, as they need it, with planned transfer of large blocks of content to minimize the risk of bottlenecks.

The future of metadata – in production and in delivery – will be the enabling technology for orchestrating workflows across every aspect of the operation.